Performance Evaluation of Real-time Speech Through a Packet Network: a Random Neural Networks-based Approach

Abstract

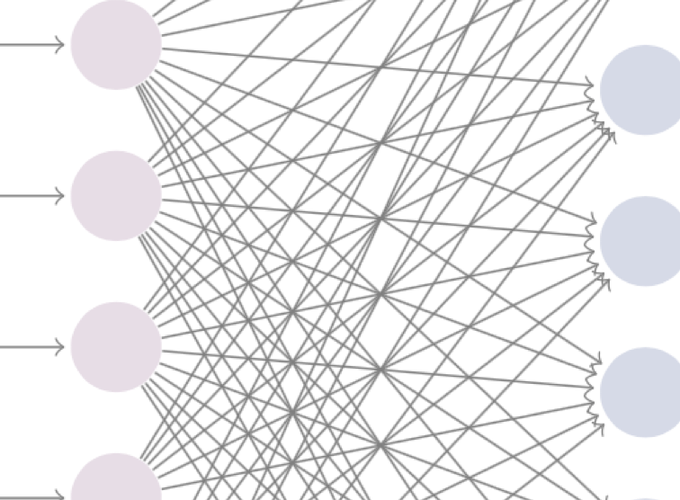

This paper addresses the problem of quantitatively evaluating the quality of a speech stream transported over the Internet, as perceived by the end user. We propose an approach that can perform this task automatically and, if necessary, in real time. Our method uses G-networks (open networks of queues with positive and negative customers) as neural networks, in which case they are called Random Neural Networks, to learn, in a sense, how humans react to a speech signal that has been distorted by encoding and transmission impairments. The resulting quality estimate can be used for control purposes, pricing applications, and similar tasks. Our method allows us to study the joint impact of several source and network parameters on quality, which appears to be new: previous work analyzes the effect of only one or two selected parameters. In this paper, we use our technique to study, all at the same time, the impact of several basic source and network parameters on the perceived quality of a non-interactive speech flow, namely loss rate, loss distribution, codec, forward error correction, and packetization interval. This is important because speech/audio quality is affected by several parameters whose combined effect is neither well identified nor well understood.
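To make the idea of "a G-network used as a neural network" concrete, the sketch below evaluates a small Random Neural Network using Gelenbe's standard steady-state relations: each neuron i is excited with probability q_i = λ⁺_i / (r_i + λ⁻_i), where λ⁺_i and λ⁻_i are the total arrival rates of positive and negative signals and r_i is the neuron's firing rate. It assumes a three-layer feedforward topology with the five parameters named above as inputs. The function name `rnn_forward`, the [0, 1] scaling of the inputs, and the random (untrained) weights are illustrative assumptions, not the authors' implementation or training procedure.

```python
import numpy as np


def rnn_forward(x, w_plus_ih, w_minus_ih, w_plus_ho, w_minus_ho, r_out):
    """Steady-state excitation probabilities of a 3-layer feedforward RNN.

    x          : exogenous positive-signal rates Lambda_i of the input neurons
                 (exogenous negative rates lambda_i are taken as 0 here)
    w_*_ih     : non-negative weights, shape (n_in, n_hidden)
    w_*_ho     : non-negative weights, shape (n_hidden, n_out)
    r_out      : firing rates of the output neurons (free parameters, since
                 output neurons send no signals to other neurons)
    """
    # Firing rate of a non-output neuron: total rate of the positive and
    # negative signals it emits, r_i = sum_j (w+_ij + w-_ij).
    r_in = w_plus_ih.sum(axis=1) + w_minus_ih.sum(axis=1)
    r_hid = w_plus_ho.sum(axis=1) + w_minus_ho.sum(axis=1)

    # Input layer: q_i = Lambda_i / r_i, clipped at 1 (saturated neuron).
    q_in = np.minimum(x / r_in, 1.0)

    # Hidden layer: q_h = lambda+_h / (r_h + lambda-_h), with
    # lambda+_h = sum_i q_i w+_ih and lambda-_h = sum_i q_i w-_ih.
    q_hid = np.minimum((q_in @ w_plus_ih) / (r_hid + q_in @ w_minus_ih), 1.0)

    # Output layer: same rule applied with the hidden-to-output weights.
    q_out = np.minimum((q_hid @ w_plus_ho) / (r_out + q_hid @ w_minus_ho), 1.0)
    return q_out


# Hypothetical input vector: the five parameters studied, each assumed to be
# pre-scaled to [0, 1] (this scaling is an assumption, not the paper's).
features = np.array([
    0.2,  # loss rate
    0.3,  # loss distribution (e.g. mean loss burst size)
    0.5,  # codec (categorical, encoded as a number)
    1.0,  # forward error correction on/off
    0.4,  # packetization interval
])

rng = np.random.default_rng(0)
n_in, n_hid, n_out = 5, 4, 1
# Untrained, randomly drawn non-negative weights; a real model would fit them
# to quality scores given by human listeners.
w_plus_ih, w_minus_ih = rng.uniform(0.0, 1.0, (2, n_in, n_hid))
w_plus_ho, w_minus_ho = rng.uniform(0.0, 1.0, (2, n_hid, n_out))

q = rnn_forward(features, w_plus_ih, w_minus_ih, w_plus_ho, w_minus_ho, r_out=1.0)
print(q)  # excitation probability of the output neuron, a value in [0, 1]
```

The output probability would then be mapped onto a quality scale; how that mapping is done is not specified here, so the sketch stops at the raw network output.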